Anyone who has been watching the AI space this year, even peripherally, will have noticed the flaming hot story of the year—ChatGPT and related chatbot applications. These AI applications are essentially deep machine learning models that are trained on hundreds of gigabytes of text and that can provide detailed, grammatically correct, and “mostly accurate” text responses to user inputs (questions, requests, or queries, which are called prompts). Specifically, these are LLMs—large language models. It is imperative, not an option, for organizations (and for most individuals) to be aware of what is going on here—not only because it is all over the news, but because it could affect your future self.

When I said “mostly accurate,” I meant that ChatGPT responses sometimes go way off target. People refer to these misses as “hallucinations,” which reflect the statistical basis of the models (see below): the application will generate plausible-sounding, grammatically correct statements that are complete falsehoods, such as “Leonardo da Vinci painted the Mona Lisa in 1815” (which is a real example of an observed ChatGPT hallucination).

I tested ChatGPT with my own account, and I was impressed with the results. I prompted it with various requests, including: write a short story on a specific topic, provide a layperson’s explanation of some complex deep machine learning concepts, create a lesson plan to learn a tough subject, create an outline for a blog on a particular topic (no, not this one), and provide some financial advice on particular investments (no, it did not provide specific advice, but it did offer warnings like NFA “Not Financial Advice” and DYOR “Do Your Own Research”). You can find my results on my Medium blog site.

LLMs are so responsive and grammatically correct (even over many paragraphs of text) that some people worry that they are sentient. Guess what? They aren’t. An LLM is merely a very large statistical model that provides the most likely sequence of words in response to a prompt. It is effectively a galaxy-sized, statistically rich version of the text autocomplete in your smartphone’s messaging app, which already delivers some highly probable guesses for the missing words in a text message like this one: “Due to a client deadline, I will be working late at the ____ this ____, so I will be home late for ____.” LLMs can respond to much more complex (but well-posed) prompts, such as requests for lesson plans for education, content for a business presentation, code for a software task, workflow steps for an IT project, and much more.
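To make the autocomplete analogy concrete, here is a minimal sketch of a "most likely next word" model in Python. It is a toy bigram counter, not how ChatGPT or any real LLM is built (those use deep neural networks trained on vastly more text). The corpus and the names `CORPUS`, `train_bigrams`, `most_likely_next`, and `autocomplete` are all made up for illustration.

```python
from collections import Counter, defaultdict

# Tiny, made-up corpus standing in for the huge text collections
# that real LLMs are trained on (an assumption for illustration).
CORPUS = (
    "i will be working late at the office this evening . "
    "i will be home late for dinner . "
    "i will be working late at the office this week . "
    "i will be home late for dinner this evening ."
)


def train_bigrams(text):
    """Count, for each word, how often each other word follows it."""
    words = text.split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def most_likely_next(follows, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    if word not in follows:
        return None
    return follows[word].most_common(1)[0][0]


def autocomplete(follows, prompt, max_words=3):
    """Greedily extend the prompt with the most likely next words."""
    words = prompt.split()
    if not words:
        return prompt
    for _ in range(max_words):
        nxt = most_likely_next(follows, words[-1])
        if nxt is None or nxt == ".":
            break
        words.append(nxt)
    return " ".join(words)


model = train_bigrams(CORPUS)
print(autocomplete(model, "i will be working late at the", max_words=1))
```

The sketch only looks at the single previous word and always picks the most frequent follower. An LLM conditions on the whole prompt and samples from a learned probability distribution, which is why it can handle far more complex requests, and also why it can produce fluent but false statements.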

To help people create well-posed prompts, the new discipline of prompt engineering has arisen. It’s not hard to find online guides to prompt engineering, including guides for very specific industries, business tasks, workplace applications, and context-dependent scenarios. You don’t need prompt engineering to find those guides; a simple web search should do the trick. And guess what? When web search engines were first created, it took a while for us to learn how to submit well-posed keyword searches. That scenario is being played out again with ChatGPT and prompt engineering, but now our queries are aimed at a much more language-based, AI-powered, statistically rich application. If you understand Bayes’ Theorem and Bayesian statistics, then you will understand me when I say that we are feeding the LLMs a vastly richer set of priors, likelihoods, and evidence, so it should not be surprising that the posteriors are shockingly good for large text outputs (most of the time).
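For readers who want the formula behind that analogy, here is Bayes’ Theorem in its standard form. The symbols are my own labels for illustration: $H$ is a hypothesis (loosely, a candidate response) and $E$ is the evidence (loosely, the prompt and its context). Treat the mapping to LLMs as an analogy only, not a description of how these models actually compute their outputs.

$$
P(H \mid E) = \frac{P(E \mid H)\, P(H)}{P(E)}
$$

Here $P(H)$ is the prior, $P(E \mid H)$ is the likelihood, $P(E)$ is the evidence, and $P(H \mid E)$ is the posterior. The richer the information behind the terms on the right-hand side, the sharper the posterior on the left.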

LLMs are part of natural language processing (NLP), a major application area of deep learning that includes natural language understanding (NLU) and natural language generation (NLG). Think of chatbots and you get the idea, just expanded to a much, much larger domain of AI-based conversation.

Computer vision (CV) is another major application area of deep learning, specifically aimed at object/pattern detection, recognition, and classification in images (including still images and video sequences). ChatGPT and LLMs are examples of generative AI using NLP for text generation. Stable Diffusion, Midjourney, and DALL-E are examples of generative AI using CV for image generation. Oh, by the way, I asked the generative AI at Stable Diffusion to create some images to go with my short story (which you can find on my Medium blog).

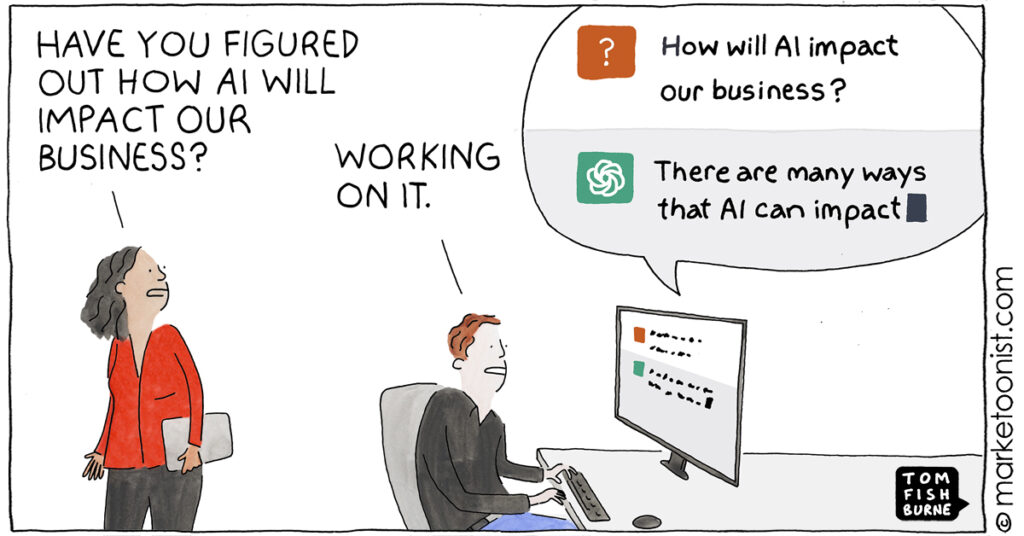

Beyond the individual examples of generative AI (ChatGPT, Stable Diffusion, etc.) that we can all experiment with, the enterprise applications can be tremendously impactful and transformative for organizations and the future of work. Those next chapters in the story are being written right now.
