The phrase “Big Data” refers to a set of serious analytical challenges that arise when data increase in quantity, real-time speed, and complexity. The three V’s of big data (Volume, Velocity, and Variety) are now well known and well worn. Their familiarity and frequent association with “big data hype” may numb us to the important data challenges that they are meant to represent. These three characterizations have their counterparts in tools and technologies. For example, Hadoop (Apache’s open source implementation of the MapReduce programming model) is the technology du jour for management and analysis of high-volume data. The Hadoop Distributed File System (HDFS) is the file system for big data storage and access in Hadoop clusters. Apache Spark is a computing framework (which can run on top of HDFS) for fast processing of high-velocity data.

But what about high-variety data? The storage and management challenges of such data are already addressed (see above), but the real challenge is in performing effective and efficient statistical modeling, data mining, and discovery across high-dimensional (complex) data sets. Software tools like Soft10, Inc.’s “automatic statistician” Dr. Mo are designed to address that specific challenge.

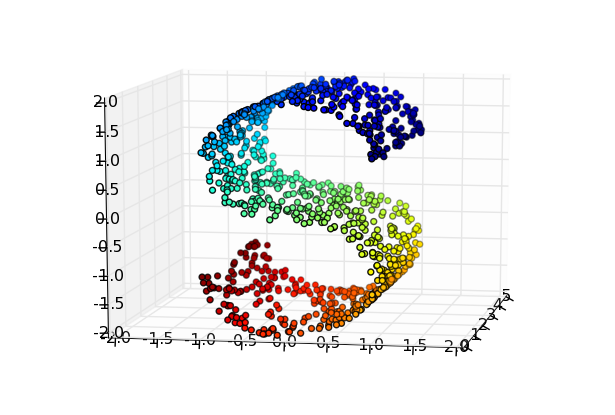

When considering complex (high-variety) data, it is important to note that even relatively small-volume data sets can pose huge challenges to modeling, mining, and analysis algorithms and tools. For example, consider a gigabyte data table with a billion entries. If those entries correspond to 500 million rows and 2 columns, then some relatively simple “textbook” techniques can be applied: e.g., correlation analysis, regression analysis, Naïve Bayes, etc. However, if those entries correspond to one million rows and 1000 columns, then the complexity of the data analysis explodes exponentially.

It is not hard to find data sets that are at least this complex, if not much worse. For example, the human genome consists of 3 billion base pairs (of just four bases: A, C, G, T) – the number of possible sequences of length 3 billion that can be formed from just four items is 4 to the power of 3 billion (limited of course by various genetic constraints). Another example will be the astronomical database to be obtained in the 10-year survey of the sky by the Large Synoptic Survey Telescope (lsst.org) – the final source table will consist of approximately 20 trillion rows and over 200 columns of scientific information per source. Analyses of all possible combinations of these scientific parameters (to discover new correlations, patterns, associations, clusters, etc.) would be prohibitive.

Basic combinatorics tells us that there are (2^N - 1) possible non-empty combinations of N things. For example, an exhaustive statistical analysis of a data table with just 3 columns (A, B, C) would require 7 distinct analyses (statistical models) of the behavior of the data: A, B, C, A with B, A with C, B with C, and all three taken at once. A data table with 5 columns would require 31 distinct analyses, and a table with 25 columns would require over 33 million distinct analyses. My calculator tells me that the number of distinct combinations of 200 variables is greater than 10^60. This extraordinarily rapid growth rate is called the “combinatorial explosion”. Since no software package could ever perform that many variations of high-dimensional data analysis, it is common to focus on joint combinations of fewer parameters. Even pairs, triples, and similar small-number combinations can have significant correlation and covariance, consequently yielding important discoveries.
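To make the arithmetic above concrete, here is a minimal Python sketch that reproduces the counts quoted in the text and lists the combinations for a three-column table. The function names (`count_nonempty_subsets`, `enumerate_subsets`, `count_small_subsets`) are hypothetical helpers invented for this illustration; they are not part of any tool mentioned in this article.

```python
from itertools import combinations
from math import comb  # available in Python 3.8+


def count_nonempty_subsets(n: int) -> int:
    """Number of non-empty combinations of n columns: 2^n - 1."""
    return 2**n - 1


def enumerate_subsets(columns):
    """List every non-empty combination of the given column names."""
    return [
        subset
        for size in range(1, len(columns) + 1)
        for subset in combinations(columns, size)
    ]


def count_small_subsets(n: int, max_size: int) -> int:
    """Number of combinations of n columns that use at most max_size columns."""
    return sum(comb(n, k) for k in range(1, max_size + 1))


if __name__ == "__main__":
    # Growth of the full search space for the table widths used in the text.
    for n in (3, 5, 25, 200):
        print(f"{n:>3} columns: {count_nonempty_subsets(n):.3e} combinations")

    # The seven analyses for a table with columns A, B, C.
    print(enumerate_subsets(["A", "B", "C"]))

    # Restricting attention to singles, pairs, and triples of 200 columns.
    print("up to triples among 200 columns:", count_small_subsets(200, 3))
```

Restricting the search to small combinations keeps the problem tractable: 200 columns yield 19,900 pairs and 1,313,400 triples, which is a large but feasible number of models to fit, in contrast to the more than 10^60 combinations of all sizes.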

Therefore, in order to meet the challenge of big data complexity (high variety), fast modeling technology is needed. Such tools provide big benefits to both statisticians and non-statisticians. These benefits multiply when the technology can automatically build and test a large number of models (different combinations of parameters) in parallel. The power of the technology is further enhanced when it ranks the output models and parameter selections in order of significance and correlation strength. Soft10’s “automatic statistician” Dr. Mo does these things and more. It models complex high-dimensional data both row-wise and column-wise, and it produces high-accuracy predictions. Dr. Mo’s proprietary multi-model technology is a powerful tool for predictive modeling and analytics across many application domains, including medicine and health, finance, customer analytics, target marketing, nonprofits, membership services, and more. Check out Dr. Mo at http://soft10ware.com/ and read more here: http://soft10ware.com/big-data-complexity-requires-fast-modeling-technology/

Kirk Borne is a member of the Soft10, Inc. Board of Advisors.

Follow Kirk Borne on Twitter @KirkDBorne