Decades (at least) of business analytics writings have focused on the power, perspicacity, value, and validity of deploying predictive and prescriptive analytics for business forecasting and optimization, respectively. These are primarily forward-looking, actionable (proactive) applications.

There are other dimensions of analytics that tend to focus on hindsight for business reporting and causal analysis – these are descriptive and diagnostic analytics, respectively, which are primarily reactive applications, mostly explanatory and investigatory, not necessarily actionable.

In the world of data there are other types of nuanced applications of business analytics that are also actionable – perhaps these are not too different from predictive and prescriptive, but their significance, value, and implementation can be explained and justified differently. Before we dive into these additional types of analytics applications, let us first consider a little pedagogical exercise with two simple evidence-based inferences.

(a) In essentially 100% of cases where an automobile is involved in an accident, the automobile had four wheels prior to the accident.

(b) In 100% of divorce cases, the divorcing couple was married prior to the divorce.

What is the point of those obvious statistical inferences? The point is that the 100% association between the event and the preceding condition has no special predictive or prescriptive power. Hence, prior knowledge of these 100% associations does not offer any actionable value. In statistical terms, the joint probability of event Y and condition X co-occurring, designated P(X,Y), is essentially the probability P(Y) of event Y occurring. The probability of the condition X occurring, P(X), is irrelevant since the existence of the precondition X is implicitly present by default.
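The reasoning in the previous paragraph can be written out in a few lines. This is only a sketch under the stated assumption that the precondition X is always present when Y occurs, so that P(X|Y) = 1, and that X is nearly universal, so that P(X) ≈ 1:

$$
P(X,Y) = P(X \mid Y)\,P(Y) = 1 \cdot P(Y) = P(Y),
\qquad
P(Y \mid X) = \frac{P(X,Y)}{P(X)} = \frac{P(Y)}{P(X)} \approx P(Y).
$$

In words: under these assumptions, knowing that X is present leaves the probability of Y essentially unchanged, which is why the 100% association carries no predictive value.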

Okay, those examples represent two remarkably uninteresting cases. Even when similar sorts of inferences occur in a business context, they have essentially zero value. How do predictive and prescriptive analytics fit into this statistical framework?

Using the same statistical terminology, the conditional probability P(Y|X) (the probability of Y occurring, given the presence of precondition X) is an expression of predictive analytics. By exploring and analyzing the business data, analysts and data scientists can search for and uncover such predictive relationships. This is predictive power discovery. Another way of saying this is: given observed data X, we can predict some outcome Y. Or more simply: given X, find Y.

Similarly (actually, conversely), we can use the conditional probability P(X|Y) (which is the probability that the precondition X exists, given the existence of outcome Y) as an expression of prescriptive analytics. How does that work in practice? By exploring and analyzing business data, analysts and data scientists can search for and uncover the conditions (causal factors) that have led to different outcomes. So, if the business wants to optimize some outcome Y, then data analysts will be tasked with finding the conditions X that must be implemented to achieve that desired outcome. This is prescriptive power discovery. Another way of saying this is: given some desired optimal outcome Y, what conditions X should we put in place? Or more simply: given Y, find X. Note how this simple mathematical expression of prescriptive analytics is exactly the opposite of our previous expression of predictive analytics (given X, find Y).
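To make the two conditional probabilities concrete, here is a minimal Python sketch that estimates P(Y|X) and P(X|Y) from counts. The records are made-up toy data, and the interpretation of X and Y (a discount offer and a purchase) is a hypothetical chosen only for illustration. A high P(X|Y) shows that X often accompanied Y; on its own it does not establish that X caused Y.

```python
from collections import Counter

# Hypothetical toy records: (condition X present?, outcome Y occurred?)
# e.g. X = "customer received a discount offer", Y = "customer made a purchase".
records = (
    [(True, True)] * 30
    + [(True, False)] * 20
    + [(False, True)] * 10
    + [(False, False)] * 40
)

counts = Counter(records)
n = len(records)

# Marginal and joint probabilities estimated from the counts.
p_x = sum(c for (x, _), c in counts.items() if x) / n
p_y = sum(c for (_, y), c in counts.items() if y) / n
p_xy = counts[(True, True)] / n

# Predictive view: given X, how likely is Y?
p_y_given_x = p_xy / p_x
# Prescriptive view: given outcome Y, how often was X in place?
p_x_given_y = p_xy / p_y

print(f"P(Y|X) = {p_y_given_x:.2f}")
print(f"P(X|Y) = {p_x_given_y:.2f}")
```

With these toy counts, Y is more likely when X is present (0.60) than overall (0.40), which is the kind of lift that makes X worth acting on.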

Here are a few business examples of this type of prescriptive analytics: Which marketing campaign is most efficient and effective (has best ROI) in optimizing sales? Which environmental factors during manufacturing, packaging, or shipping lead to reduced product returns? Which pricing strategies lead to the best business revenue? What equipment maintenance schedule minimizes failures, downtime (mean time to recovery), and overall maintenance costs?

Now that we have described predictive and prescriptive analytics in detail, what is left? What are the three types of actionable (and valuable) business analytics applications that are not called predictive or prescriptive? They are sentinel, precursor, and cognitive analytics. Let’s define what these are.

- Sentinel Analytics – in common usage, the sentinel is the person on the guard station who is charged with watching for significant incoming or emergent activity. In practice, all activity is being observed and a decision is made as to whether any particular activity requires some sort of triage: sounding an alarm, or sending an alert to decision-makers, or doing nothing.

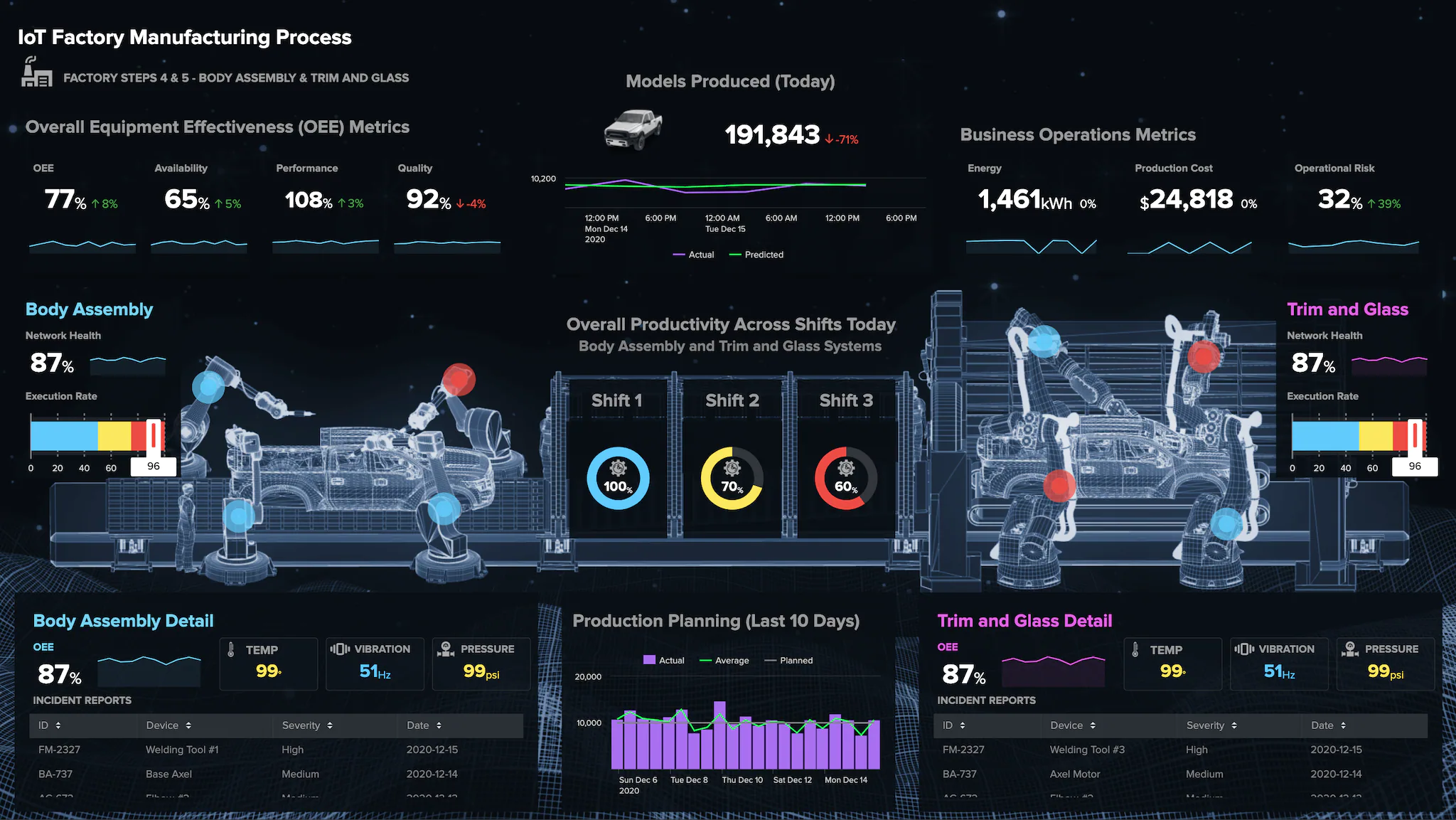

- In the enterprise, sentinel analytics is most timely and beneficial when applied to real-time, dynamic data streams and time-critical decisions. For example, sensors (including internet of things devices and APIs on data networks) can be deployed with logic (analytics, statistical, and/or machine learning algorithms) to monitor and “watch” business systems and processes for emerging patterns, trends, behaviors, unusual operating modes, and anomalies that might be indicators of activities that require business attention, decisions, and/or action.

- Precursor Analytics – in common usage, precursors are the early-warning indicators (harbingers, forerunners) of something else more serious or catastrophic that is about to come. We occasionally hear about earthquake precursors (increased levels of radon in groundwater), tsunami precursors (a deep ocean earthquake), and cyber-attack precursors (phishing incidents). Precursor analytics is related to sentinel analytics. The latter (sentinel) is associated primarily with “watching” the data for interesting patterns that might require action, while precursor analytics is associated primarily with training the business systems to quickly identify those specific “learned” patterns and events that are known to be associated with high-risk events, thus requiring timely attention, intervention, and remediation.

- In these applications, the data science involvement includes both the “learning” of the most significant patterns to alert on and the improvement of their models (logic) to minimize false positives and false negatives. The analytics triage is critical, to avoid alarm fatigue (sending too many unimportant alerts) and to avoid underreporting of important actionable events. One could say that sentinel analytics is more like unsupervised machine learning, while precursor analytics is more like supervised machine learning. That is not a totally clean separation and distinction, but it might help to clarify their different applications of data science.

- The counterexample to the supervised learning explanation of precursor analytics is a “black swan” event – a rare high-impact event that is difficult to predict under normal circumstances – such as the global pandemic, which led to the failure of many predictive models in business. Broken models are definitely disruptive to analytics applications and business operations. Paradoxically, the precursor was actually predictive in a disruptive anti-predictive sort of way, which brings us right back to P(Y|X), or maybe it should be stated as P(“not Y”|X) where X is the black swan event (i.e., the predicted outcome Y from existing models will not occur in this case). As such, the global pandemic serves as a warning (a harbinger of disruption) and consequently as a “training example” to businesses for any future black swans.
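The sentinel and precursor ideas above can be sketched in a few lines of Python. This is an illustrative sketch, not a production design: `sentinel_flags` is a hypothetical unsupervised watcher that flags a reading when it deviates strongly from a trailing window (a rolling z-score, one simple anomaly rule among many), and `alert_quality` is a hypothetical helper that scores alerts against known incidents, which is the labeled feedback a precursor model would use to reduce false positives and false negatives. The readings, window size, and threshold are all made-up values.

```python
from statistics import mean, stdev

def sentinel_flags(values, window=5, threshold=3.0):
    """Return indices whose value lies more than `threshold` standard
    deviations from the mean of the preceding `window` values."""
    flags = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and abs(values[i] - mu) / sigma > threshold:
            flags.append(i)
    return flags

def alert_quality(alerts, incidents):
    """Precision and recall of alert indices against known incident indices."""
    alerts, incidents = set(alerts), set(incidents)
    true_positives = len(alerts & incidents)
    precision = true_positives / len(alerts) if alerts else 0.0
    recall = true_positives / len(incidents) if incidents else 0.0
    return precision, recall

# Hypothetical sensor readings with one obvious spike at index 7.
readings = [10.0, 10.2, 9.9, 10.1, 10.0, 10.1, 9.8, 25.0, 10.0, 10.2]
flags = sentinel_flags(readings)

# Hypothetical labels: suppose an incident was later confirmed at index 7.
precision, recall = alert_quality(flags, incidents=[7])
print(flags, precision, recall)
```

Low precision corresponds to alarm fatigue (too many unimportant alerts), while low recall corresponds to underreporting of important events; tuning `window` and `threshold` trades one against the other.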

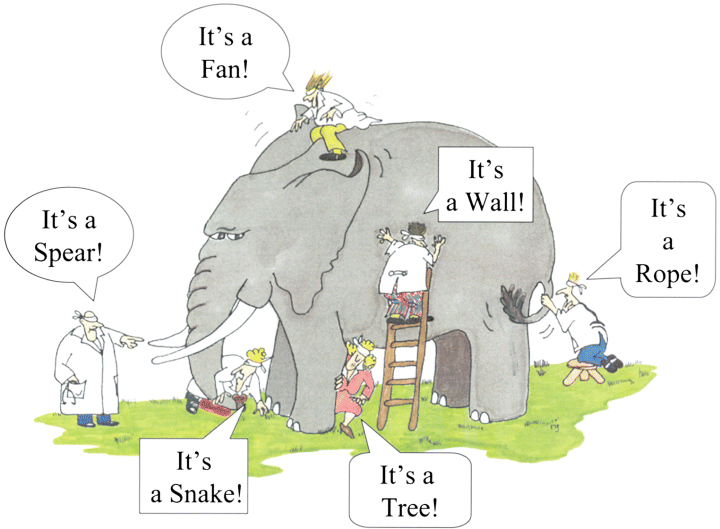

- Cognitive Analytics – this analytics mindset approach focuses on “surprise” discovery in data, using machine learning and AI to emulate and automate the cognitive abilities of humans. The goal is to discover novel, interesting, unexpected, and potentially valuable signals in the flood of streaming enterprise data. These may not be high-risk discoveries, but they could be high-reward discoveries. How does that resemble human cognitive abilities? Curiosity! Being curious about seeing something “funny” that you didn’t expect, thereby putting a “marker” in the data stream: “Look here! Pay attention! Ask questions about this!”

- Cognitive analytics is basically the opposite of descriptive analytics. In descriptive analytics, the task is to find answers to predetermined business questions (how much, how many, how often, who, where, when), whereas cognitive analytics is tasked with finding the business questions that should be asked. Descriptive: find the right answers in the data. Cognitive: find the right questions in the data. Cognitive analytics can then be viewed as a precursor to diagnostic analytics, which is the investigative stage of analytics that answers the questions raised by cognitive analytics (“Why did this happen?”, “Why are we seeing this pattern in our data?”, “What is the business impact of this trend, anomaly, behavior?”, “What is our next-best action as a result of this?”, “That’s funny! What is that?”).

None of these descriptions of the 3 “new” analytics applications is meant to declare that these are completely distinct and different from the “big 4” analytics applications that we have known for many years (Descriptive, Diagnostic, Predictive, Prescriptive). But the differences between the “big 4” and the “new 3” are in the nuanced business applications of these analytics in the enterprise and in the types of inferences that the data scientists are asked to derive from the business data.

Deploying these analytics in the cloud further expands their accessibility, democratization, enterprise-wide acceptance, broad advocacy, and ultimate business value. Blending automated analytics products (coming from the sentinel, precursor, and cognitive applications) with human-in-the-loop inquisitiveness, curiosity, creativity, out-of-the-box thinking, idea generation, and persistence can transform any organization into a data analytics powerhouse through an analytic culture revolution. This is more imperative than ever, as a global survey of analytics executives has revealed:

- “Companies have been working to become more data-driven for many years, with mixed results.”

- “Right now, the biggest challenge for organizations working on their data strategy might not have to do with technology at all.”

- “Corporate chief data, information, and analytics executives reported that cultural change is the most critical business imperative.”

- “Just 26.5% of organizations report having established a data-driven organization.”

- “91.9% of executives cite cultural obstacles as the greatest barrier to becoming data driven.”

- Reference: https://hbr.org/2022/02/why-becoming-a-data-driven-organization-is-so-hard

Where do organizations get help to overcome these challenges? Microsoft delivers what its clients need to help them grow their top line with cloud-based analytics. Microsoft’s cloud-based analytics products and services propel business insights, innovation, and value from enterprise data, with all of the dimensions of analytics applications brought into the game. Specifically, cloud analytics (accessing and inferencing on multiple diverse business datasets across business units) for a wide variety of enterprise applications can sharpen the workforce’s focus on value and growth, including: forward-looking insights through predictive, sentinel, and precursor analytics; novel recommendations; rich customer engagement; analytic product innovation; resilience through prescriptive analytics; surprise discovery in data, asking the right questions, and exploring the most insightful lines of inquiry through cognitive analytics; and more.

Microsoft Azure Cloud extends ease-of-access analytics to all, delivers increased speed to deployment, and provides leading security, compliance, and governance – with price performance for any organization. Whether organizations are seeking scalability in their enterprise data systems, advanced analytics capabilities (including the “big 4” and the “new 3”), real-time analytics (essential value-drivers from streaming data, including IoT, network logs, online customer interactions, supply chain, etc.), or the best in machine learning model-building and deployment services, Microsoft Azure Cloud has you covered. To learn more about it, go to https://azure.microsoft.com/en-us/solutions/cloud-scale-analytics and bring actionable business analytics to higher levels of proficiency and productivity across your organization.