In this post, we will examine ways that your organization can separate useful content into separate categories that amplify your own staff’s performance. Before we start, I have a few questions for you.

What attributes of your organization’s strategies can you attribute to successful outcomes? How long do you deliberate before taking specific deliberate actions? Do you converse with your employees about decisions that might be the converse of what they would expect? Is a process modification that saves a minute in someone’s workday considered too minute for consideration? Do you present your employees with a present for their innovative ideas? Do you perfect your plans in anticipation of perfect outcomes? Or do you project foregone conclusions on a project before it is completed?

If you have good answers to these questions, that is awesome! I would not contest any of your answers since this is not a contest. In fact, this is something quite different. Before you combine all these questions in a heap and thresh them in a combine, and before you buffet me with a buffet of skeptical remarks, stick with me and let me explain. Do not close the door on me when I am so close to giving you an explanation.

What you have just experienced is a plethora of heteronyms. Heteronyms are words that are spelled identically but are pronounced differently and have different meanings. If you include the title of this blog post, you were just presented with 13 examples of heteronyms in the preceding paragraphs. Can you find them all?

Seriously now, what do these word games have to do with content strategy? I would say that they have a great deal to do with it. Specifically, in the modern era of massive data collections and exploding content repositories, we can no longer rely on keyword searches alone. In the case of a heteronym, a keyword search would return both uses of the word, even though their meanings are quite different. In “information retrieval” language, we would say that we have high RECALL, but low PRECISION. In other words, we find most (or all) of the documents that contain the word (high recall), but many of the results do not match the meaning we were searching for (low precision). That is no longer good enough when the volume is so high.
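
To make the recall/precision trade-off concrete, here is a minimal Python sketch. The five-document corpus, the `relevant` set, and the helper names are hypothetical, chosen only for illustration. Precision is the fraction of retrieved documents that are relevant; recall is the fraction of relevant documents that are retrieved. A plain keyword search for the heteronym “minute” retrieves every document containing the word (perfect recall) but also returns documents that use the other meaning (lower precision).

```python
# Hypothetical mini-corpus: "minute" is used either as a unit of time
# or as an adjective meaning "tiny".
corpus = {
    "d1": "The fix saves one minute per workday.",
    "d2": "A minute change in the form layout.",
    "d3": "Meetings ran ten minutes over.",
    "d4": "Each minute of downtime costs money.",
    "d5": "The differences were minute and hard to see.",
}

# Ground truth for the searcher's intent: "minute" as a unit of time.
relevant = {"d1", "d3", "d4"}


def keyword_search(docs, keyword):
    """Return ids of documents whose text contains the keyword (case-insensitive substring match)."""
    return {doc_id for doc_id, text in docs.items() if keyword.lower() in text.lower()}


def precision_recall(retrieved, relevant):
    """Precision = hits / retrieved; recall = hits / relevant, where hits = retrieved intersect relevant."""
    hits = len(retrieved & relevant)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


retrieved = keyword_search(corpus, "minute")
p, r = precision_recall(retrieved, relevant)
print(sorted(retrieved))              # ['d1', 'd2', 'd3', 'd4', 'd5']
print(f"precision={p:.2f} recall={r:.2f}")  # precision=0.60 recall=1.00
```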

The

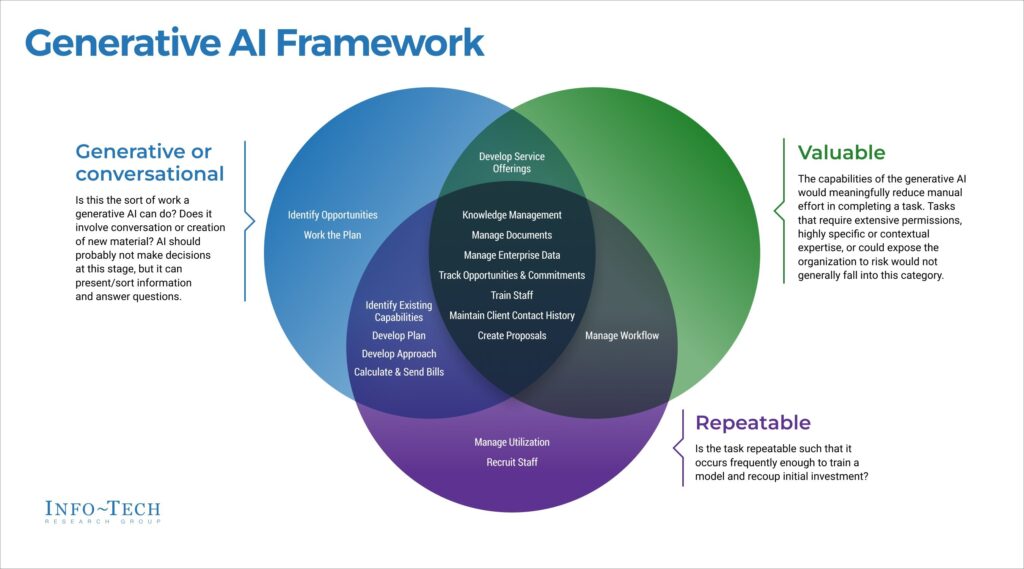

key to success is to start enhancing and augmenting content management systems

(CMS) with additional features: semantic content and context. This is

accomplished through tags, annotations, and metadata (TAM). TAM management,

like content management, begins with business strategy.
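
As a rough illustration of how TAM can sit alongside content, here is a minimal Python sketch. The `ContentItem` and `Annotation` classes, their field names, and sense labels such as `minute/time` are hypothetical choices, not a standard schema: tags are short labels on the whole item, annotations attach a label to a specific span of text, and metadata holds descriptive key/value pairs. The sense labels are assigned by hand here; in practice a word-sense classifier or a human curator would supply them. Searching on a sense tag instead of the raw keyword returns only the intended meaning.

```python
from dataclasses import dataclass, field


@dataclass
class Annotation:
    start: int   # character offset where the annotated span begins
    end: int     # character offset just past the end of the span
    label: str   # e.g. a word-sense label (hypothetical naming scheme)


@dataclass
class ContentItem:
    doc_id: str
    text: str
    tags: set = field(default_factory=set)          # labels for the whole item
    annotations: list = field(default_factory=list)  # span-level labels
    metadata: dict = field(default_factory=dict)     # descriptive key/value pairs


def annotate_sense(item, word, label):
    """Annotate every occurrence of `word` in item.text with `label`, and tag the item with it."""
    start = item.text.find(word)
    while start != -1:
        item.annotations.append(Annotation(start, start + len(word), label))
        start = item.text.find(word, start + len(word))
    item.tags.add(label)


def search_by_tag(items, tag):
    """Return ids of items carrying the given semantic tag."""
    return [item.doc_id for item in items if tag in item.tags]


a = ContentItem("d1", "The fix saves one minute per workday.", metadata={"type": "memo"})
b = ContentItem("d2", "A minute change in the form layout.", metadata={"type": "memo"})
annotate_sense(a, "minute", "minute/time")
annotate_sense(b, "minute", "minute/tiny")

print(search_by_tag([a, b], "minute/time"))  # ['d1']
```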

Strategic content management focuses on business outcomes, business process improvement, efficiency (precision: “did I find only the content that I need without a lot of noise?”), and effectiveness (recall: “did I find all the content that I need?”). When people request a needle in a haystack, delivering the whole haystack that contains that needle does not help them. A content delivery system that works that way is clearly not good for business productivity. So, there must be a strategy regarding who, what, when, where, why, and how the organization’s content is to be indexed, stored, accessed, delivered, used, and documented. The content strategy should emulate a digital library strategy. Labeling, indexing, ease of discovery, and ease of access are essential if end-users are to find and benefit from the collection.

My favorite approach to TAM creation, and to modern data management in general, is AI and machine learning (ML). That is, apply AI and ML techniques to digital content (databases, documents, images, videos, press releases, forms, web content, social network posts, etc.) to infer topics, trends, sentiment, context, and content; to identify named entities; to extract numerical content (including the units on those numbers); and to detect negations. Do not forget the negations. A document that states “this form should not be used for XYZ” is exactly the opposite of a document that states “this form must be used for XYZ”. Similarly, a social media post that states “Yes. I believe that this product is good” is quite different from a post that states “Yeah, sure. I believe that this product is good. LOL.”
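
Negation handling can start with simple rules before moving to ML. The sketch below is a simplified cue-and-window rule, loosely in the spirit of rule-based negation detectors such as NegEx; the cue list, the three-token window, and the function names are assumptions for illustration, not a tuned configuration. A target phrase counts as negated if a negation cue appears within a few tokens before it. Sarcasm such as the “Yeah, sure… LOL” example is beyond what rules like this catch and typically needs a trained sentiment or sarcasm model.

```python
import re

# Hypothetical cue list and window size; real systems use larger, curated
# cue lists and model negation scope more carefully.
NEGATION_CUES = {"not", "no", "never", "cannot", "shouldn't", "mustn't", "don't"}
WINDOW = 3  # number of tokens before the target phrase to scan for a cue


def tokenize(text):
    """Lowercase word tokens, keeping ASCII apostrophes inside words (e.g. "don't")."""
    return re.findall(r"[a-z]+(?:'[a-z]+)?", text.lower())


def is_negated(text, target):
    """Return True if any occurrence of `target` has a negation cue within WINDOW tokens before it."""
    tokens = tokenize(text)
    target_tokens = tokenize(target)
    n = len(target_tokens)
    for i in range(len(tokens) - n + 1):
        if tokens[i:i + n] == target_tokens:
            preceding = tokens[max(0, i - WINDOW):i]
            if any(tok in NEGATION_CUES for tok in preceding):
                return True
    return False


print(is_negated("This form should not be used for XYZ.", "be used"))  # True
print(is_negated("This form must be used for XYZ.", "be used"))        # False
```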

Contextual TAM enhances a CMS with knowledge-driven search and retrieval, not just keyword-driven search. Contextual TAM includes semantic TAM, taxonomic indexing, and even usage-based tags (digital breadcrumbs left by the users of specific pieces of content, including the keywords and phrases that people used to describe the content in their own reports). Adding these to your organization’s content makes the CMS semantically searchable and usable, which is far more laser-focused (high-precision) than keyword search.
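
Usage-based tags can be sketched as a feedback loop: when users describe a piece of content in their own words (for example, in a report that cites it), those words are recorded as tags on that item, and later searches match against them as well as against curated semantic tags. The `UsageTagIndex` class and its methods are hypothetical, and the matching (exact, lowercased tag lookup, ranked by how often users applied the phrase) is deliberately simple.

```python
from collections import Counter, defaultdict


class UsageTagIndex:
    """Toy index combining curated semantic tags with usage-based tags (hypothetical design)."""

    def __init__(self):
        self.semantic_tags = defaultdict(set)   # doc_id -> curated tags
        self.usage_tags = defaultdict(Counter)  # doc_id -> phrase -> times users applied it

    def add_semantic_tag(self, doc_id, tag):
        self.semantic_tags[doc_id].add(tag.lower())

    def record_usage(self, doc_id, phrases):
        """Record the words/phrases a user attached to doc_id (e.g. in their own report)."""
        for phrase in phrases:
            self.usage_tags[doc_id][phrase.lower()] += 1

    def search(self, term):
        """Return doc ids tagged with `term`, ranked by usage count, then by id."""
        term = term.lower()
        doc_ids = set(self.semantic_tags) | set(self.usage_tags)
        matches = [
            d for d in doc_ids
            if term in self.semantic_tags.get(d, set())
            or term in self.usage_tags.get(d, Counter())
        ]
        return sorted(matches, key=lambda d: (-self.usage_tags.get(d, Counter())[term], d))


index = UsageTagIndex()
index.add_semantic_tag("policy-17", "travel reimbursement")
index.record_usage("policy-17", ["per diem rules"])
index.record_usage("form-42", ["per diem rules", "expense form"])
index.record_usage("form-42", ["per diem rules"])
print(index.search("per diem rules"))  # ['form-42', 'policy-17']
```

Ranking by usage count is one possible design choice; it lets the phrases people actually use surface the content they actually found useful.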

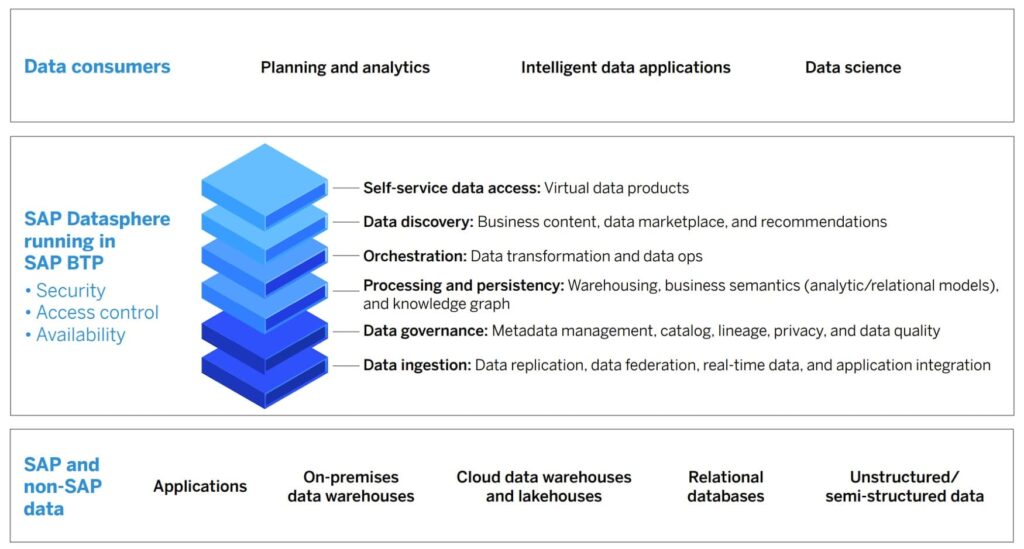

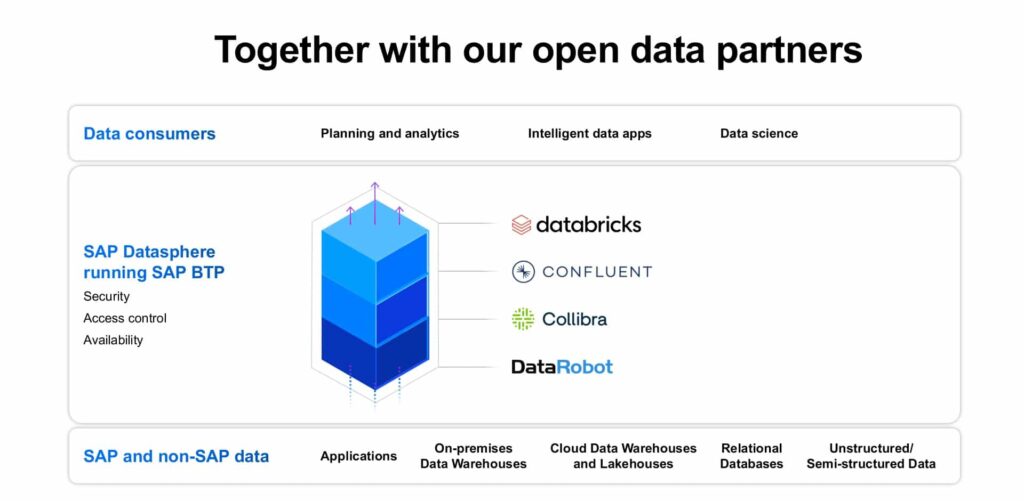

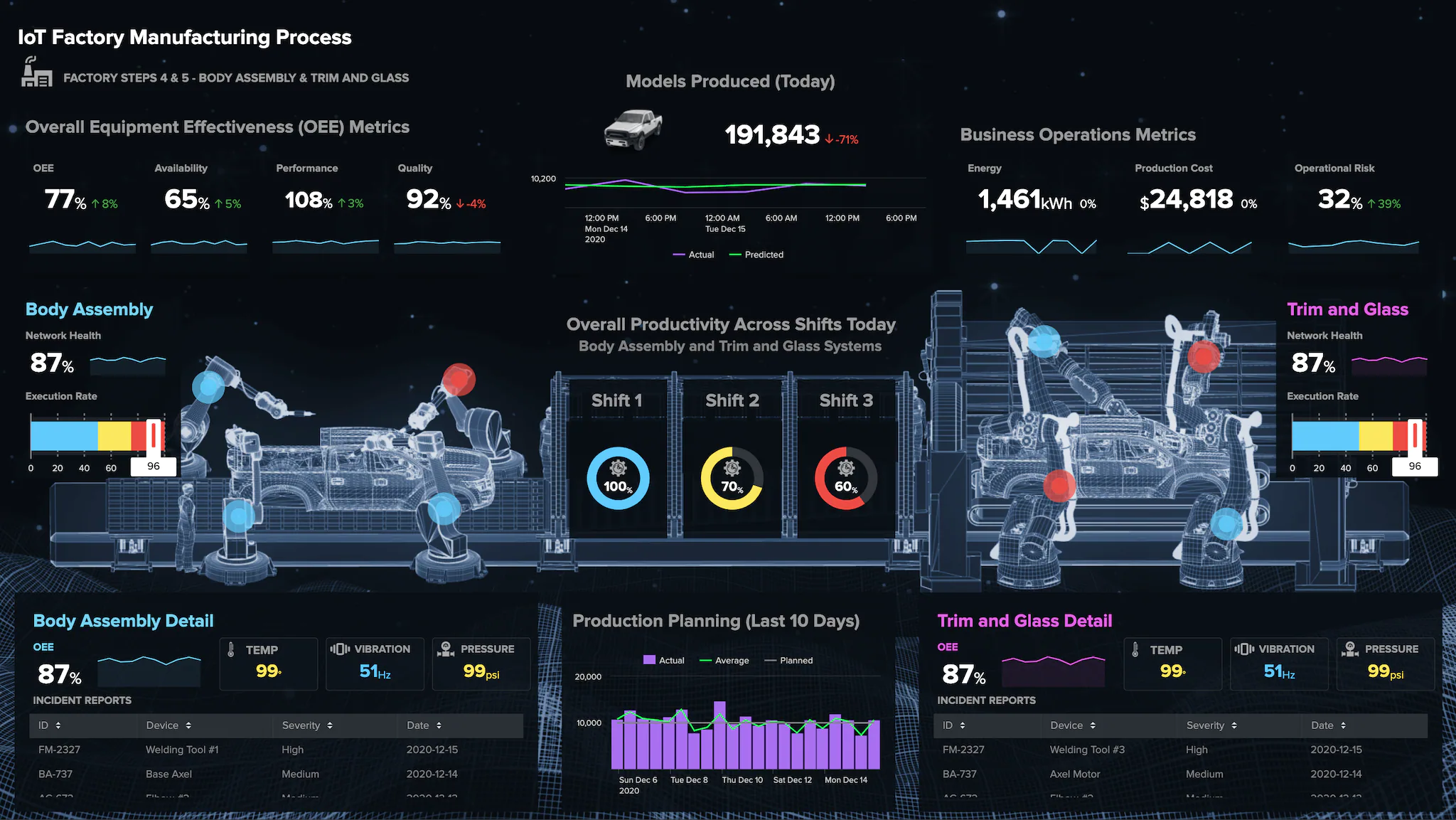

One type of content strategy implementation that is specific to data collections is the data catalog. Data catalogs are very useful and important. They become even more useful and valuable if they include granular search capabilities. For example, the end-user may need only the piece of the dataset that has the content their task requires, rather than the full dataset. Tagging and annotating those subcomponents and subsets (i.e., granules) of the data collection for fast search, access, and retrieval is also important for efficient orchestration and delivery of the data that fuels AI, automation, and machine learning operations.
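
Here is a minimal sketch of granule-level cataloging under assumed names: a dataset is split into granules (here, hypothetical monthly partitions of a sensor dataset), each granule carries its own tags, and a query returns only the matching granules rather than the whole dataset. The `Granule` structure, the catalog contents, and the tag vocabulary are illustrative only and do not reflect any particular data catalog product’s API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Granule:
    dataset: str     # parent dataset name
    key: str         # granule identifier within the dataset (e.g. a partition)
    tags: frozenset  # semantic tags describing this granule's content


# Hypothetical catalog: one dataset split into monthly granules, each tagged.
CATALOG = [
    Granule("plant-sensors", "2024-01", frozenset({"temperature", "line-a"})),
    Granule("plant-sensors", "2024-02", frozenset({"temperature", "line-b", "anomaly"})),
    Granule("plant-sensors", "2024-03", frozenset({"vibration", "line-a", "anomaly"})),
]


def find_granules(catalog, required_tags):
    """Return (dataset, key) pairs for granules that carry all of the required tags."""
    required = set(required_tags)
    return [(g.dataset, g.key) for g in catalog if required <= g.tags]


print(find_granules(CATALOG, {"anomaly", "line-b"}))  # [('plant-sensors', '2024-02')]
```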

One way to describe this is “smart content” for intelligent digital business operations. Smart content includes labeled (tagged, annotated) metadata (TAM). These labels include content, context, uses, sources, and characterizations (patterns, features) associated with the whole content and with individual content granules. Labels can be learned through machine learning, applied by human experts, or proposed by non-experts when those labels represent cognitive, human-discovered patterns and features in the data. Labels can be learned and applied in existing CMS, in massive streaming data, and in sensor data (collected in devices at the “edge”).
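
Because labels can come from machine learning, human experts, or non-experts, it helps to store each label with its source. The sketch below keeps that provenance and applies one possible reconciliation rule; the `Label` class, the source names, the precedence order, and the 0.8 confidence threshold are all hypothetical, and a real policy would be set by the organization.

```python
from dataclasses import dataclass

# Hypothetical precedence: expert labels first, then ML labels, then
# non-expert suggestions. Lower rank wins.
SOURCE_RANK = {"expert": 0, "ml": 1, "non-expert": 2}


@dataclass
class Label:
    value: str
    source: str        # "expert", "ml", or "non-expert"
    confidence: float  # 0.0 to 1.0; human labels might simply use 1.0


def select_label(candidates, min_ml_confidence=0.8):
    """Pick one label: drop low-confidence ML labels, then prefer the best-ranked source."""
    usable = [
        c for c in candidates
        if not (c.source == "ml" and c.confidence < min_ml_confidence)
    ]
    if not usable:
        return None
    return min(usable, key=lambda c: (SOURCE_RANK[c.source], -c.confidence))


candidates = [
    Label("defect:scratch", "non-expert", 1.0),
    Label("defect:crack", "ml", 0.65),
]
print(select_label(candidates).value)  # defect:scratch (the ML label is below the threshold)
```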

Some specific tools and techniques that can be applied to CMS to generate smart content include these:

- Natural language understanding and natural language generation

- Topic modeling (including topic drift and topic emergence detection)

- Sentiment detection (including emotion detection)

- AI-generated and ML-inferred content and context

- Entity identification and extraction

- Numerical quantity extraction

- Automated structured (searchable) database generation from textual (unstructured) document collections (for example: Textual ETL).
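
Numerical quantity extraction, along with the earlier point about keeping the units attached to numbers, can be illustrated with a regular-expression sketch. The unit list, the pattern, and the `extract_quantities` name are hypothetical and deliberately small; production systems typically rely on NLP libraries or trained extractors to handle ranges, written-out numbers, and unit normalization.

```python
import re

# Hypothetical, deliberately small unit vocabulary (longer alternatives first).
UNITS = ["minutes", "minute", "hours", "hour", "ms", "kg", "km", "mm", "cm", "USD", "%", "g", "m", "s"]
UNIT_ALTERNATION = "|".join(re.escape(u) for u in UNITS)

QUANTITY = re.compile(
    r"(?P<number>-?\d+(?:,\d{3})*(?:\.\d+)?)"  # e.g. 12, -3.5, 1,200.75
    r"\s*"
    rf"(?P<unit>{UNIT_ALTERNATION})"
    r"(?![A-Za-z])"  # the unit must not be the start of a longer word
)


def extract_quantities(text):
    """Return (value, unit) pairs, with thousands separators removed and values as floats."""
    return [
        (float(m.group("number").replace(",", "")), m.group("unit"))
        for m in QUANTITY.finditer(text)
    ]


print(extract_quantities("The pump moved 1,200.5 kg in 45 minutes, a 3% gain."))
# [(1200.5, 'kg'), (45.0, 'minutes'), (3.0, '%')]
```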

In short, smart content thrives at the convergence of AI and content. Labels are curated and stored with the content, thus enabling curation, cataloging (indexing), search, delivery, orchestration, and use of content and data in AI applications, including knowledge-driven decision-making and autonomous operations. Techniques that both enable (contribute to) and benefit from smart content include content discovery, machine learning, knowledge graphs, semantic linked data, semantic data integration, knowledge discovery, and knowledge management. Smart content thus meets the needs of digital business operations and autonomous (AI and intelligent automation) activities, which must devour streams of content and data: not just any content, but smart content, meaning the right (semantically identified) content delivered at the right time in the right context.

The four tactical steps in a smart content strategy are:

- Characterize and contextualize the patterns, events, and entities in the content collection with semantic (contextual) tags, annotations, and metadata (TAM).

- Collect, curate, and catalog (i.e., index) each TAM component to make it searchable, accessible, and reusable.

- Deliver the right content at the right time in the right context to the decision agent.

- Decide and act on the delivered insights and knowledge.
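
The four steps can be sketched as a small Python pipeline. Every piece is a hypothetical stand-in: `characterize` uses a keyword-to-tag lookup where a real system would use ML models or expert curation, `catalog` builds an inverted index from tags to documents, `deliver` returns only the documents that match the decision agent’s current context, and `decide` is a placeholder rule.

```python
from collections import defaultdict

# Step 1 stand-in: a hypothetical keyword -> semantic tag lookup.
TAG_RULES = {"outage": "incident", "refund": "billing", "invoice": "billing"}


def characterize(docs):
    """Step 1: attach semantic tags (TAM) to each document."""
    return {
        doc_id: {tag for word, tag in TAG_RULES.items() if word in text.lower()}
        for doc_id, text in docs.items()
    }


def catalog(tagged):
    """Step 2: build an inverted index from each tag to the documents that carry it."""
    index = defaultdict(set)
    for doc_id, tags in tagged.items():
        for tag in tags:
            index[tag].add(doc_id)
    return index


def deliver(index, context_tag):
    """Step 3: deliver only the documents relevant to the agent's current context."""
    return sorted(index.get(context_tag, set()))


def decide(delivered):
    """Step 4: placeholder decision rule acting on the delivered content."""
    return "escalate" if len(delivered) >= 2 else "monitor"


docs = {
    "t1": "Customer reports an outage in region 2.",
    "t2": "Second outage ticket for the same region.",
    "t3": "Refund requested for a duplicate invoice.",
}
delivered = deliver(catalog(characterize(docs)), "incident")
print(delivered, decide(delivered))  # ['t1', 't2'] escalate
```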

Remember, do not be content with your current content management strategy. Instead, discover and deliver the perfect smart content that perfects your digital business outcomes. Smart content strategy can save end-users countless minutes in a typical workday, and that type of business process improvement certainly is not too minute for consideration.